Scraping an RSS feed to JSON

This tutorial uses Optimus Mine to perform a simple scrape of an RSS feed and store the results in a JSON file on your computer. The goal of this tutorial is to introduce you to the basic concepts of Optimus Mine, as well as the most common configuration parameters.

In this tutorial you will scrape the BBC News RSS feed, extracting the title, description, date & URL of each article and storing the results on your desktop.

Before you begin

If you do not have Optimus Mine installed already, follow the installation guide to download & install Optimus Mine on your machine.

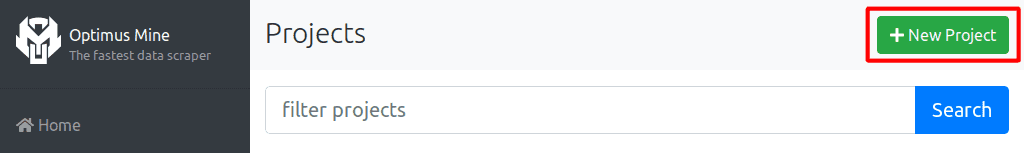

Step one: Create a new project

- Launch the Optimus Mine application.

- On the top right hand side, click New Project.

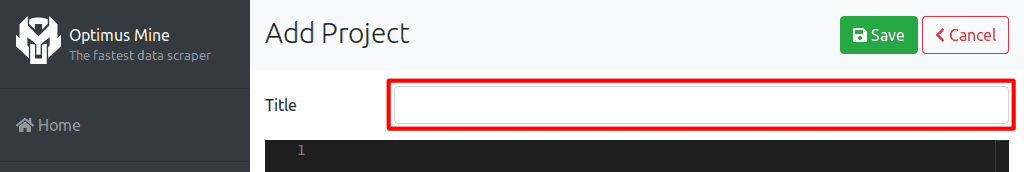

- Enter a name for your new project in the Title field.

Step two: Configure your pipeline

Next you create the pipeline configuration. The following YAML document is used to create the pipeline you will use to scrape the RSS feed and create the JSON file. Copy & paste it into the editor below the title field and hit Save.

defaults:

verbose: true

retry: 10

timeout: 5m

tasks:

- name: Scrape RSS

task: scrape

input: http://feeds.bbci.co.uk/news/rss.xml

engine: xml

schema:

type: array

source: //item

items:

type: object

required: [title, description, date, url]

properties:

title:

type: string

source: ./title

description:

type: string

source: ./description

date:

type: string

source: ./pubDate

filter:

type: date

parse: 'DDD, DD MMM YYYY hh:mm:ss ZZZ'

format: 'YYYY-MM-DD hh:mm:ss'

url:

type: string

source: ./link

- name: Export Data

task: export

engine: file

format: json

path: '{{ userDir }}/Desktop/bbc-news.json'Configuration details

Let’s walk through each of the properties in your configuration to understand what they do.

defaults:

In this scraper we have two tasks, scrape and export. The defaults verbose, retry and timeout

will apply to both tasks scrape and export. Defaults apply to all tasks in your project.

verbose: true

Enables detailed logging which can help you identify problems if your scraper is not working as

intended. You would usually set this to false once your scraper is working.

retry: 10

The scraper will retry HTTP requests 10 times before failing.

timeout: 30s

If a HTTP request has not completed within 30 seconds the scraper will consider it failed.

tasks:

Contains the array of all your pipeline tasks.

The scrape task

name: Scrape RSS

This sets the name of the task to “Scrape RSS”. The name will be displayed in the log messages relating to this task.

task: scrape

This tells Optimus Mine that this task as a scraper, meaning that input values will be treated as

URLs and requested via HTTP.

input: http://feeds.bbci.co.uk/news/rss.xml

The input passes data to your task. In this example it tells the scraper to scrape the URL

http://feeds.bbci.co.uk/news/rss.xml.

engine: xml

Tells the scraper to parse the data as XML, using XPath expressions in source to extract the data.

schema:

The schema contains the information on how to parse & structure the scraped data.

type: array

Setting the root schema type to array tells the task that there are multiple items on each page.

source: //item

Since engine is set to xml, the source parameter contains the XPath expression to the data you

extract. As type is set to array it expects an XPath that selects multiple nodes in the XML

document. In RSS feeds each item is wrapped in an item tag, so this will extract each item node

within the XML document.

items:

Since the schema type is set to array we need to define the schema of each element within the

array.

type: object

Setting type to object tells the parser that each item in the array is a key-value map.

required: [title, description, date, url]

Only elements in the array which contain title, description, date and url will be saved.

properties:

Contains the schema for each key-value pair in the object. The keys nested directly under

properties (i.e. title, description, date & url) define the keys in the outputted data.

type: string

This tells the parser to treat the scraped data as a string of text.

source: ./title, source: ./description, source: ./pubDate, source: ./link

As with source: //item above, these contain the XPath expression to select the data to extract.

Since they start with a . (dot) the parser will only look within the parent node (i.e. //item).

filter:

Filters are used to manipulate data in your pipeline. Here we’ve set type: date to use the date

filter which transforms dates from one format into another. parse: 'DDD, DD MMM YYYY hh:mm:ss ZZZ'

tells the filter to parse a date like Wed, 01 Jul 2020 18:22:16 GMT and

format: 'YYYY-MM-DD hh:mm:ss' tells it to output the date like 2020-07-01 18:22:16.

The export task

name: Export Data

This sets the name of the task to “Export Data”. The name will be displayed in the log messages relating to this task.

task: export

This tells Optimus Mine that this task as an export, meaning that incoming values from previous tasks will be exported.

engine: file

The file engine is used for storing the exported data on your local machine.

format: json

This sets the format of the exported data to JSON.

path: '{{ userDir }}/Desktop/bbc-news.json'

Used to specify the path of the resulting file on your local machine. In this case we’re saving the

data in a file called bbc-news.json on your desktop.

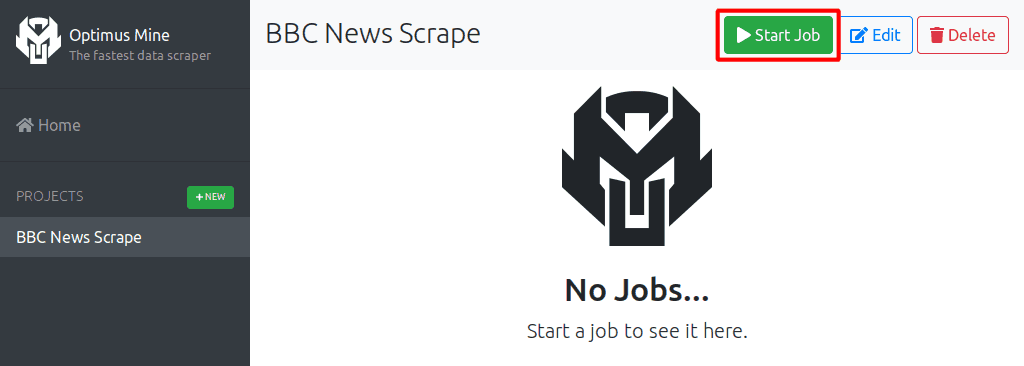

Step three: Run the pipeline

You’re all set. Simply click on Start Job to run the scraper.

Once completed you should see a new file called bbc-news.json on your desktop with the following

kind of data (formatted for readability):

[

{

"date": "2020-07-01 19:04:01",

"description": "Five-year-old Tony Hudgell set a target of raising £500 for the hospital that saved his life.",

"title": "Tony Hudgell raises £1m walking 10km on prosthetic legs",

"url": "https://www.bbc.co.uk/news/uk-53248430"

},

{

"date": "2020-07-01 19:15:05",

"description": "In a surprise message, Harry said people like them gave him the \"greatest hope\" for the future.",

"title": "Duke of Sussex praises young anti-racism activists",

"url": "https://www.bbc.co.uk/news/uk-53255219"

},

{

"date": "2020-07-01 00:37:37",

"description": "The hit comedy plays fast and loose with the contest's rules and Edinburgh's geography.",

"title": "Eight things Will Ferrell's Eurovision movie gets wrong (and two it gets right)",

"url": "https://www.bbc.co.uk/news/entertainment-arts-53217853"

}

// ...

]Whats next

- To learn more about the schema used for parsing data, see the Parsing Data page.

- To learn more about the filters used for manipulating data, see the Filters page.

- To dive into detail about all the other configuration properties, see the Configuration Reference.